
As is frequently the case, I was moved to write this piece by a discussion on LinkedIn.com. This time round, the group involved was The Data Warehousing Institute (TDWI™) 2.0 and the thread, entitled Is one version of the truth attainable?, was started by J. Piscioneri. I should however make a nod in the direction of an article on Jim Harris’ excellent Obsessive-Compulsive Data Quality Blog called The Data Information Continuum; Jim also contributed to the LinkedIn.com thread.

Standard note: You need to be a member of both LinkedIn.com and the group mentioned to view the discussions.

Introduction

Here are a couple of sections from the original poster’s starting comments:

I’ve been thinking: is one version of the truth attainable or is it a bit of snake oil? Is it a helpful concept that powerfully communicates a way out of spreadmart purgatory? Or does the idea of one version of the truth gloss over the fact that context or point of view are an inherent part of any statement about data, which effectively makes truth relative? I’m leaning toward the latter position.

[…]

There can only be one version of the truth if everyone speaks the same language and has a common point of view. I’m not sure this is attainable. To the extent that it is, it’s definitely not a technology exercise. It’s organizational change management. It’s about changing the culture of an organization and potentially breaking down longstanding barriers.

Please join the group if you would like to read the whole post and the subsequent discussions, which were very lively. Here I am only going to refer to these tangentially and instead focus on the concept of a single version of the truth itself.

Readers who are not interested in the elliptical section of this article and who would instead like to cut to the chase are invited to click here (warning: there are still some ellipses in the latter sections).

A [very] brief and occasionally accurate history of truth

I have discovered a truly marvellous proof of the nature of truth, which this column is too narrow to contain.

— Pierre de Tomas (1637)

Instead of trying to rediscover M. Tomas’ proof, I’ll simply catalogue some of the disciplines that have been associated (rightly or wrongly) with trying to grapple with the area:

- Various branches of Philosophy, including:

- Metaphysics

- Epistemology

- Ethics

- Logic

- History

- Religion (or perhaps more generally spirituality)

- Natural Science

- Mathematics

- and of course Polygraphism

Given my background in Pure Mathematics the reader might expect me to trumpet the claims of this discipline to be the sole arbiter of truth; I would reply yes and no. Mathematics does indeed deal in absolute truth, but only of the type: if we assume A and B, it then follows that C is true. This is known as the axiomatic approach. Mathematics makes no claim for the veracity of axioms themselves (though clearly many axioms would be regarded as self-evidently true to the non-professional). I will also manfully resist the temptation to refer to the wrecking ball that Kurt Gödel took to axiomatic systems in 1931.

I have also made reference (admittedly often rather obliquely) to various branches of science on this blog, so perhaps this is another place to search for truth. However the Physical sciences do not really deal in anything as absolute as truth. Instead they develop models that approximate observations; these are called scientific theories. A good theory will both explain aspects of currently observed phenomena and offer predictions for yet-to-be-observed behaviour (what use is a model if it doesn’t tell us things that we don’t already know?). In this way scientific theories are rather like Business Analytics.

Unlike mathematical theories, the scientific versions are rather resistant to proof. Somewhat unfairly, while a mountain of experiments consistent with a scientific theory does not prove it, it takes only one incompatible data point to disprove it. When such an inconvenient fact rears its head, the theory will need to be revised to accommodate the new data, or entirely discarded and replaced by a new theory. This is of course an iterative process and precisely how our scientific learning increases. Warning bells generally start to ring when a scientist starts to talk about their theory being true, as opposed to a useful tool. The same observation could be made of those who begin to view their Business Analytics models as being true, but that is perhaps a story for another time.

I am going to come back to Physical science (or more specifically Physics) a little later, but for now let’s agree that this area is not going to result in defining truth either. Some people would argue that truth is the preserve of one of the other subjects listed above, either Philosophy or Religion. I’m not going to get into a debate on the merits of either of these views, but I will state that perhaps the latter is more concerned with personal truth than supra-individual truth (otherwise why do so many religious people disagree with each other?).

Discussing religion on a blog is also a certain way to start a fire, so I’ll move quickly on. I’m a little more relaxed about criticising some aspects of Philosophy; to me this can all too easily descend into solipsism (sometimes even quicker than artificial intelligence and cognitive science do). Although Philosophy could be described as the search for truth, I’m not convinced that this is the same as finding it. Maybe truth itself doesn’t really exist, so attempting to create a single version of it is doomed to failure. However, perhaps there is hope.

Trusting your GUT feeling

After the preceding divertimento, it is time to return to the more prosaic world of Business Intelligence. However there is first room for the promised reference to Physics. For me, the phrase “a single version of the truth” always has echoes of the search for a Grand Unified Theory (GUT). Analogous to our discussions about truth, there are some (minor) definitional issues with GUT as well.

Some hold that GUT applies to a unification of the electromagnetic, weak nuclear and strong nuclear forces at very high energy levels (the first two having already been paired in the electroweak force). Others that GUT refers to a merging of the particles and forces covered by the Standard Model of Quantum Mechanics (which works well for the very small) with General Relativity (which works well for the very big). People in the first camp might refer to this second unification as a ToE (Theory of Everything), but there is sometimes a limit to how much Douglas Adams’ esteemed work applies to reality.

For the purposes of this article, I’ll perform the standard scientific trick of a simplifying assumption and use GUT in the grander sense of the term.

Scientists have striven to find a GUT for decades, if not centuries, and several candidates have been proposed. GUT has proved to be something of a Holy Grail for Physicists. Work in this area, while not as yet having been successful (at least at the time of writing), has undeniably helped to shed a light on many other areas where our understanding was previously rather dim.

This is where the connection with a single version of the truth comes in. Not so much that either concept is guaranteed to be achievable, but that a lot of good and useful things can be accomplished on a journey towards both of them. If, in a given organisation, the journey to a single version of the truth reaches its ultimate destination, then great. However if, in another company, a single version of the truth remains eternally just over the next hill, or round the next corner, then this is hardly disastrous and maybe it is the journey itself (and the aspirations with which it is commenced) that matters more than the destination.

Before I begin to sound too philosophical (cf. above) let me try to make this more concrete by going back to our starting point with some Mathematics and considering some Venn diagrams.

Ordo ab chao

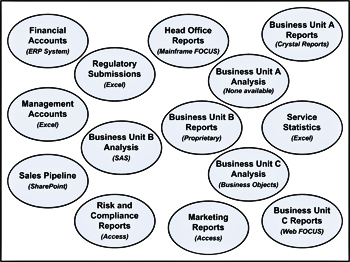

In my experience the following is the type of situation that a good Business Intelligence programme should address:

The problems here are manifold:

- Although the various report systems are shown as separate, the real situation is probably much worse. Each of the reporting and analysis systems will overlap, perhaps substantially, with one or more of the other ones. Indeed the overlapping may be so convoluted that it would be difficult to represent this in two dimensions and I am not going to try. This means that you can invariably ask the same question (how much have we sold this month?) of different systems and get different answers. It may be difficult to tell which of these is correct; indeed none of them may be a true reflection of business reality.

- There is a whole set of things that may be treated differently in the different ellipses. I’ll mention just two for now: date and currency. In one system a transaction may be recorded in a month when it is entered into the system. In another it may be allocated to the month when the event actually occurred (sometimes quite a while before it is entered). In a third perhaps the transaction is only dated once it has been authorised by a supervisor.

In a multi-currency environment reports may be in the transactional currency, rolled-up to the currency of the country in which they occurred, or perhaps aggregated across many countries in a number of “corporate” currencies. Which rate to use (rate on the day, average for the month, rolling average for the last year, a rate tied to some earlier business transaction etc.) may be different in different systems, equally the rate may well vary according to the date of the transaction (making the last set of comments about which date is used even more pertinent).

- A whole set of other issues arises when you begin to consider things such as taxation (are figures nett or gross), discounts, commissions to other parties, phased transactions and financial estimates. Some reports may totally ignore these, others may take account of some but not others. A mist of misunderstanding is likely to arise.

- Something that is not drawn on the above diagram is the flow of data between systems. Typically there will be a spaghetti-like flow of bits and bytes between the different areas. What is also not that uncommon is that there is both bifurcation and merging in these flows. For example, some sorts of transactions from Business Unit A may end up in the Marketing database, whereas others do not. Perhaps transactions carried out on behalf of another company in the group appear in Business Unit B’s reports, but must be excluded from the local P&L. The combinations are almost limitless.

Interfaces can also do interesting things to data, re-labelling it, correcting (or so their authors hope) errors in source data and generally twisting the input to form output that may be radically different. Also, when interfaces are anything other than real-time, they introduce a whole new arena in which dates can get muddled. For instance, what if a business transaction occurred in a front-end system on the last day of a year, but was not interfaced to a corporate database until the first day of the next one – which year does it get allocated to in the two places?

- Finally, the above says nothing about the costs (staff and software) of maintaining a heterogeneous reporting landscape; or indeed the costs of wasted time arguing about which numbers are right, or attempting to perform tortuous (and ultimately fruitless) reconciliations.
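To make the date problem above concrete, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `Transaction` fields, the `monthly_totals` helper and the two sample sales are hypothetical and do not come from any real system. It simply shows how the same transactions produce different monthly totals depending on which date a reporting system chooses to use.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date
from decimal import Decimal


@dataclass(frozen=True)
class Transaction:
    """Hypothetical transaction carrying the three candidate dates."""
    amount: Decimal
    occurred_on: date    # when the business event actually happened
    entered_on: date     # when it was keyed into the system
    authorised_on: date  # when a supervisor signed it off


def monthly_totals(transactions, date_field):
    """Sum amounts by (year, month) using the chosen date attribute."""
    totals = defaultdict(Decimal)
    for txn in transactions:
        d = getattr(txn, date_field)
        totals[(d.year, d.month)] += txn.amount
    return dict(totals)


# Made-up data: two sales that straddle a year end.
transactions = [
    Transaction(Decimal("100"), date(2008, 12, 31), date(2009, 1, 1), date(2009, 1, 5)),
    Transaction(Decimal("250"), date(2008, 12, 15), date(2008, 12, 16), date(2009, 1, 2)),
]

# The same question asked three ways gives three different answers.
for basis in ("occurred_on", "entered_on", "authorised_on"):
    print(basis, monthly_totals(transactions, basis))
```

None of the three answers is wrong as such; each is a consequence of a dating rule that is rarely visible to the person reading the report.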

Now the ideal situation is that we move to the following diagram:

This looks all very nice and tidy, but there are still two major problems.

- A full realisation of this transformation may be prohibitively expensive, or time-consuming.

- Having brought everything together into one place offers an opportunity to standardise terminology and to eliminate the confusion caused by redundancy. However, it doesn’t per se address the other points made from the second bullet onwards above.

The need to focus on what is possible in a reasonable time-frame and at a reasonable cost may lead to a more pragmatic approach where the number of reporting and analysis systems is reduced, but to a number greater than one. Good project management may indeed dictate a rolling programme of consolidation, with opportunities to review what has worked and what has not and to ascertain whether business value is indeed being generated by the programme.

Nevertheless, I would argue that it is beneficial to envisage a final state for the information architecture, even if there is a tacit acceptance that this may not be realised for years, if at all. Such a framework helps to guide work in a way that making it up as we go along does not. I cover this area in more detail in both Holistic vs Incremental approaches to BI and Tactical Meandering for those who are interested.

It is also inevitable that even in a single BI system data will need to be presented in different ways for different purposes. To take just one example, if your goal is to see how the make-up of a book of business has varied over time, then it is eminently sensible to use a current exchange rate for all transactions, thereby removing any skewing of the figures caused by forex fluctuations. This is particularly the case when trying to assess the profitability of business where revenue occurs at a discrete point in the past, but costs may be spread out over time.

However, if it is necessary to look at how the organisation’s cash-flow is changing over time, then the impact of fluctuations in foreign exchange rates must be taken into account. Sadly if an American company wants to report how much revenue it has from its French subsidiary then the figures must reflect real-life euro / dollar rates (unrealised and realised foreign currency gains and losses notwithstanding).
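A small sketch may help to show the two presentations side by side. The revenue figures and euro / dollar rates below are invented purely for illustration, and the `convert` helper is a hypothetical name rather than part of any BI tool.

```python
from decimal import Decimal

# Invented EUR revenue by month and invented EUR -> USD rates.
revenue_eur = {"2008-11": Decimal("1000"), "2008-12": Decimal("1000")}
rate_in_month = {"2008-11": Decimal("1.25"), "2008-12": Decimal("1.40")}
current_rate = Decimal("1.40")


def convert(revenue, rate_for):
    """Convert each month's revenue using the rate chosen by rate_for(month)."""
    return {month: amount * rate_for(month) for month, amount in revenue.items()}


# Constant-rate view: forex movements are removed, so the underlying
# business can be compared month to month.
constant = convert(revenue_eur, lambda month: current_rate)

# Actual-rate view: each month uses the rate that applied at the time,
# which is what reported dollar revenue has to reflect.
actual = convert(revenue_eur, lambda month: rate_in_month[month])

print("constant:", constant)
print("actual:  ", actual)
```

With these made-up numbers the constant-rate view shows flat revenue, while the actual-rate view shows an increase that is entirely down to currency movement. Both are legitimate; they answer different questions.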

What is important here is labelling. Ideally each report should show the assumptions under which it has been compiled at the top. This would include the exchange rate strategy used, the method by which transactions are allocated to dates, whether figures are nett or gross and which transactions (if any) have been excluded. Under this approach, while it is inevitable that the totals on some reports will not agree, at least the reports themselves will explain why this is the case.
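One way to picture this labelling is to carry the assumptions around with the report and print them at the top. The sketch below is only an illustration under my own assumptions: `ReportAssumptions` and `render_header` are hypothetical names, and a real BI tool would have its own mechanism for report metadata.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReportAssumptions:
    """Hypothetical container for the assumptions a report is compiled under."""
    exchange_rate_strategy: str  # e.g. "rate on the day", "average for the month"
    date_basis: str              # e.g. "event date", "entry date", "authorisation date"
    figures: str                 # "nett" or "gross"
    exclusions: tuple = ()       # descriptions of any excluded transactions


def render_header(title, assumptions):
    """Build the block of text that appears at the top of the report."""
    lines = [
        title,
        f"Exchange rates: {assumptions.exchange_rate_strategy}",
        f"Dated by: {assumptions.date_basis}",
        f"Figures: {assumptions.figures}",
        "Excluded: " + (", ".join(assumptions.exclusions) or "none"),
    ]
    return "\n".join(lines)


header = render_header(
    "Sales by month",
    ReportAssumptions("average for the month", "event date", "nett"),
)
print(header)
```

Two reports whose totals differ can then be compared header to header, which is usually quicker than attempting a reconciliation.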

So this is my take on a single version of the truth. It is both a) an aspirational description of the ideal situation and something that is worth striving for and b) a convenient marketing term – a sound-bite if you will – that presents a palatable way of describing a complex set of concepts. I tried to capture this essence in my reply to the LinkedIn.com thread, which was as follows:

To me, the (extremely hackneyed) phrase “a single version of the truth” means a few things:

- One place to go to run reports and perform analysis (as opposed to several different, unreconciled, overlapping systems and local spreadsheets / Access DBs)

- When something, say “growth” appears on a report, cube, or dashboard, it is always calculated the same way and means the same thing (e.g. if you have growth in dollar terms and growth excluding the impact of currency fluctuations, then these are two measures and should be clearly tagged as such).

- More importantly, that the organisation buys into there being just one set of figures that will be used and self-polices attempts to subvert this with roll-your-own data.

Of course none of this equates to anything to do with truth in the normal sense of the word. However life is full of imprecise terminology, which nevertheless manages to convey meaning better than overly precise alternatives.

More’s Utopia was never intended to depict a realistic place or system of government. These facts have not stopped generations of thinkers and doers from aspiring to make the world a better place, while realising that the ultimate goal may remain out of reach. In my opinion neither should the unlikelihood of achieving a perfect single version of the truth deter Business Intelligence professionals from aspiring to this Utopian vision.

I have come pretty close to achieving a single version of the truth in a large, complex organisation. Pretty close is not 100%, but in Business Intelligence anything above 80% is certainly more than worth the effort.

Peter,

Insightful piece!

Just remember, a truth is only true until it is shown to be untrue…..

Glenn

That’s so true Glenn :-).

Peter

Only until I show it to be untrue ;)!

Outside of BI:

I think the trouble with all discussions about the “nature of truth” and the “version of truth” and the pessimism that underpins ever truly “knowing” anything is because we are looking at the current, state-of-the-art copy of the book of knowledge.

If we looked at the clearly exponential nature of the creation/gathering of knowledge that Kurzweil talks about we should have nothing, if not optimism about getting a more and more lucid view of the universe. This is not to say that we can expect answers in our lifetime, so a certain amount of pessimism is probably valid. However, I don’t see how we can justifiably raise concerns that truth is unknowable or relative. Even the relativity of time and space don’t, in my opinion, impinge on the possible absoluteness of truth.

To summarize:

1) No reason to doubt the absoluteness of truth

2) No reason to doubt that the exponential growth of knowledge/technology will arm us with more and more powerful means to interact/monitor the universe

3) Plenty of reason to doubt that mankind will make it far enough along the exponential curve of technology without killing itself

Thanks for the comments Ragerman,

I guess you focussed most on the elliptical middle section of the piece.

Peter

I am truly amazed by the amount of time wasted by people in the past and in the present in attempting to find and define truth. I have done the same for the last 20 years and perhaps come out with a book that could clarify our confusion in this area.

If we mean by truth something that no one disputes then there is only one truth and that simply is death. About everything else there has never been a consensus. So what does that mean? Truth is something that can be predicted with certainty.

What about everything else? Is everything else similar to predicting when a single thing will die?

The need for a single version of truth is necessarily essential to make our predictions as close to what happens in reality.

Our inability to discover a version of truth does not in any way diminish the importance of the single version of truth.

Unfortunately though, this society remains incapable of seeing a single version of the truth for we have clouded our minds with theories that are not right and data that represents falsehoods.

Yes! If we are prepared to unlearn all the stupid things that have been dinned in to our heads by pseudo intellectuals and false pretenders, the truth is not far to seek.

We just need to be academically honest and intellectually incorruptible.

Thanks for the comments – you realise that this post is primarily about business intelligence – right?

Peter

And speaking of the holy grail…

Peter, while you have focused your comments on BI, much of the challenge in obtaining “a single version of the truth” originates in an organization’s operational IT systems. In most corporations of any size a single source of accurate and reliable data is next to impossible to identify and agree upon. This is due to the number of various operational systems and various office automation products in use in most corporations. This, in addition to the data semantics issues you bring up, makes it extremely difficult if not impossible to have “a single version of the truth” in a BI environment. Enterprise architecture “challenges and issues” are at the core of why this is a pursuit of the holy grail that is very difficult to obtain.

The one technology area that addressed this enterprise data architecture issue is SOA in conjunction with a middleware bus architecture to communicate data across multiple application systems and silos. Unfortunately from my perspective most business people and corporations are not willing to take on reengineering a corporation at the enterprise level to address business problems as large as this because the solution seems to be worse than the problem in terms of taking buckets of money and years to address. What business leader is willing to “stay the course” long enough to cast a wooden stake in the heart of a problem this large? And even if they were willing to attempt such a feat, is their tenure long enough that they’ll even be able to put a dent in such a huge business problem and issue?

So office workers are typically left with their office automation products to create their own “version of their truth” on their desktops. How often will that ever agree with anyone else’s “version of the truth”…

So is “a single version of the truth” a Don Quixote undertaking?

Paul,

Sorry for the delay in replying. The systems landscape that I was working in looked something like this (drastically simplified of course):

https://peterthomas.files.wordpress.com/2009/02/insurance-systems-architecture.jpg

If I focus on just policy systems, in Europe there were three of these, one covering two business units, the others a business unit each. In addition there was an old character-based system that was both interfaced to and could still be used for some types of direct entry (normally fixing mistakes). But we also had to take on board non-European policy systems that were processing European business (e.g. a policy issued in Brazil for the subsidiary of a Spanish company), which complicated things further.

Some people wanted to look at policies according to where they were issued, others according to where the master account was located, others still according to who was the customer (or group of customers) or broker (or group of brokers).

You could argue that these were different versions of the truth, but they were just the same central data sliced in different ways.

I was also tasked with Application Integration and Architecture so another exercise was to simplify the interface landscape – generally standardising on MQ Series back then.

My point is that it was a relatively messy landscape (though I have seen worse), but it was nevertheless possible to progress towards the mythical SVT.

Peter

[…] A single version of the truth? […]

Single version of the truth is closer since the newer BI tools allow us to report across disparate data sources (I would never want to try to do too much of this outside of reporting datamarts at this point).

As the various event information is gathered from all its various ERPs and conformed to standard ‘masters’ we’ve been able to give larger and larger pictures across different facts in the past years. Unfortunately prior to the newest tools, combined reporting required messy specialized solutions.

As we showcase the ability to gather disparate transactions (different dimensions, granularity, etc) together under a single reporting solution umbrella, the business users clamor to add more. :) The main issue is streamlining the requirement and project cycle.

We can provide reporting across all of them with great performance as well as allow the lowest level of detail. The facts can remain unique from one another while still exposed in a one stop shopping spot.

You may be thinking that I’m unrealistic since what I’m talking about would take too much processing time. I’m spoiled. I get to pull already scrubbed (to warehouse structures) data from multiple areas, have augmented our datamart loads processes to run parallel, and have a GREAT database environment.

Lydia,

Thanks for your comments. Why were you not able to bring the data together (rather than having BI tools gather it from disparate places)?

Peter

Of course, we could bring the data together but normally it required gathering them in one large blob (as in the horror movie not the data type) as a single fact. What gets created is too large volume for detail info, is confusing at the detail level, very complex if any have differing security, and if combined in the same records there would be records with gaps that the user had to ‘interpret’.

Now, I’ve got a ‘new’ world where I can keep datamart transactions in their own ‘silo’ within the datamart, conform the dimensions that are common to other transactions, add new non-conformed dmns and apply specialized security if necessary. Slap on some Materialized Views and expose it in the reporting. The reporting tools bring the information together at report time.

I’m excited about the possibilities.

Thank you for the clarification.

Peter

[…] of analytics), is a greater emphasis on accuracy. If enterprise BI is to aspire to becoming the single version of the truth for an organisation, then much more emphasis needs to be placed on accuracy. For information that […]

[…] Here is the original post: A single version of the truth? « Peter Thomas – Award-winning … […]

[…] time to time, notably scientific ones such as in the middle sections of Recipes for Success?, or A Single Version of the Truth? – I was clearly feeling quizzical when I wrote both of those pieces! Sometimes these […]

A comment about facts and the truth:

Looking for the truth in numbers and records is very subjective, and people will only “see” what suits them.

For example, if you were to look at my bank account then you would see around 2004 that my sister wired me money. So most would assume one of two scenarios depending upon personal prejudices: either (1) she gave it to me or (2) she owed it to me. Actually, neither scenario is correct; it so happens that my mother was returning money I had given her from a job I held before returning to study. Only her bank wasn’t capable of doing the transfer.

Here’s another scenario, again from a true story. If you were to look at a daughter’s and mother’s credit histories and see that the mother went bankrupt with high credit card bills while the daughter didn’t have much in the way of credit card transactions until she was much older and could afford it, then it might be natural to assume that the daughter was made of more responsible stuff than the mother, very disciplined, mature, etc. But if you were to ask around then you might find that the daughter lived a very extravagant life and used her parents’ money and cards to finance this, and this contributed (leather furniture, etc.) to the mother’s credit card bills.

So although numbers on paper are indisputable, their interpretations depend upon the prejudices of the reader, making the whole task as subjective as judging artwork.

Thanks for taking the time to comment Anon (mind if I call you Anon?),

I sort of see what you are saying, but in both of the above scenarios you are surely saying that there was not enough information available – right?

Peter

Yes, quite frankly I do take offence at being called Anon.

Is Mousy any better?

Peter

[…] include some of my most highly-rated pieces such as Who should be accountable for data quality?, A single version of the truth? and “Why Business Intelligence projects fail”. Perhaps the fact that they related to topical […]

[…] of dynamic data. (See Evan Levy’s TDWI article, “SVT No More” and Peter J. Thomas’ blog post on the topic for some more […]


[…] I have got this wrong myself in these very pages, e.g. in A Single Version of the Truth?, in the section titled Ordo ab Chao. I really, really ought to know […]

[…] Part II – Map Reading or Patterns Patterns Everywhere; sometimes implicit as in Analogies or A Single Version of the Truth. These articles typically blend Business and Scientific or Mathematical ideas, seeking to find […]